The Connector Platform 2.31.0

Index Data

June 2015

Introduction

The Connector Platform is a set of tools for building and running database connectors: gateways that scrape human-facing web-sites and make the results available for searching and retrieval via standard IR protocols such as the much-loved ANSI/NISO Z39.50 and its more web-friendly progeny SRU and SRW.

This is a useful thing to do for lots of reasons, but we had a specific motivation for constructing this platform: we build metasearching solutions (see MasterKey, using as the metasearching kernel our own UI-independent engine Pazpar2. Pazpar2 uses the standard protocols for searching backends: by design, the engine itself knows about a bare minimum number of ways to do searching, because the focus of that particular software component is not on communications but on very fast, highly parallel searching, merging, deduplication and sorting.

So in order for Pazpar2, and therefore Masterkey, to be able to search the widest possible selection of resources, we need a way to make connectors to those resources that will present them in a form that Pazpar2 can search. Because the connectors provide standards-compliant interfaces, they are also useful in other contexts: among other possibilities, they can be used by other vendors' metasearch solutions.

Architecture

The model for connectors was thrashed out in a series on informal meetings in Montreal, and so has come to be referred to as the Montreal Model. It's a purely conceptual model, quite independent of how connectors happen to be stored and transmitted: it's about what kind of thing they are, not how they're represented. (But don't worry, there is also a concrete representation, which we'll show you at the end of this page.)

The model makes a clear distinction between three concepts: Connectors, Tasks and Steps.

Connector

A connector is a complete, self-contained specification for how to use a web-site for searching and retrieval. There is nothing about a connector that ties is particularly to the Z39.50 or SRU protocols: it described only the interaction with the back end, leaving it to other software to control how it is invoked.

A connector contains three things:

Metadata: a set of key=value pairs which contain information about the connector: for example, its title, author and date. These are not needed when running the connector, but are important for administering them, especially within the Repository.

Properties: a separate set of key=value pairs that are distinguished from the metadata in two ways. First, they influence the running connector; and second, their values may be arbitrarily complex structures, whereas those of metadata are always simple strings. For example, while the

block_cssproperty is a boolean, and can take only the valuestrueandfalse, thewhitelistproperty has as its value a list of Internet domains.Tasks. The meat of a connector is the set of tasks that it provides, which together provide its functionality. They are discussed in more detail in the next section.

Task

When a user searches on a web-site, or an application such as a metasearch engine searches on a user's behalf, the whole search-and-retrieve process typically consists of several separate tasks.

Tasks come in four types, described in more detail below (init, search, parse and next), but a connector need not have exactly one of each. Many connectors -- such as those for publicly available sites like Google and Wikipedia -- will not need an init task at all; and some connectors will provide multiple instances of the search task. For example, if a simple keyword search is submitted, then a search task that expects to use only the keyword argument will be invoked; but if author and title are specific as separate parts of a query, then a search task that uses the author and title arguments will be invoked instead, if one exists. Such a task would probably use the back-end web site's Advanced Search page.

init

Some web-sites require a user to authenticate before being allowed to search. For these, a separate initialization step is required before the searches are submitted. Higher-level logic, such as the Z39.50/SRU-to-CFEngine gateway, must determines whether or not this task needs to be invoked at the start of each session, using application-level criteria: for example, a Z39.50 session might cause a connector's init task to be run if authentication information is included in the Z39.50 InitRequest.

init tasks typically navigate to a login page, copy task parameters such as username and password into appropriate fields on that page, and submit the form. This generally results in the server issuing a cookie which allows access to the main site.

search

Every connector needs one or more search tasks. Their job is to load the web-site's appropriate search page (which may be either a Basic or Advanced Search page depending on which arguments are provided), fill in the form, submit it, and extract a hit-count from the result page.

parse

The purpose of the parse task is to recognize the parts of a result page that contain records, and extract field from them: author, title, date, etc. -- whatever is available. In general, this is the most complex part of a connector.

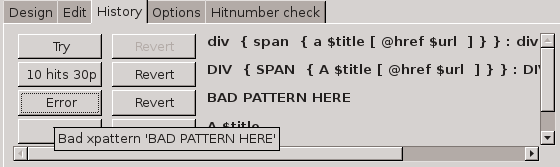

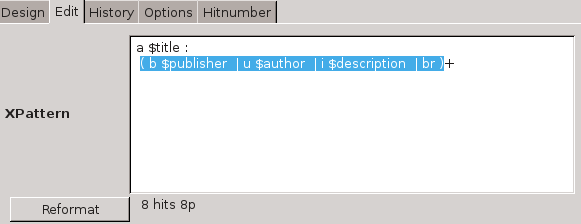

The two main approaches that the Connector Platform supports are parsing by regular expression matching on the HTML, or using XPattern, an XPath-like language that matches sequences of elements in the DOM tree. Helpers exist for constructing XPatterns automatically, and this is the preferred approach for sites whose HTML is sufficiently well structured to support it.

In general, the data extracted by the parsers requires post-processing to make it usable: for example, extraneous text often needs to be stripped out of field, whitespace needs trimming, dates need normalizing, URLs need to be made to work from outside the originating site, etc. The Platform provides facilities for these kinds of transformations, as well as a general-purpose regular-expression transformer. These are described in detail in the reference portion of this manual.

next

Most web-sites do not present all the results of a search on a single page. This would obviously be prohibitive in cases where many results are found -- for example, at the time of writing Google has 575,000,000 hits for the search "water". Accordingly, connectors must provide a next task which can navigate from one page of results to the next: the higher-level code that runs the connector will invoke this task, alternating with the parse task, as many times as necessary to fetch the number of records required by the application.

In general, then, the sequence of task invocations in a session is as follows:

- init

- search 1

- parse 1

- next 1, parse 2

- next 2, parse 3

- [more next/parse pairs as needed]

- search 2

- parse 1

- next 1, parse 2

- next 2, parse 3

- [etc.]

- [more searches as needed]

- [parse and next/parse pairs as needed after each search]

Each task consists of three things: a sequence of steps, a set of named test arguments, and optionally a group of tests. These are now described in more detail.

Tasks contain steps

Most fundamentally, a task consists of a sequence of steps, which are run in order, and which together fulfill the task. In general the steps are run in order from first to last, but there are a few ways in which that order can be tweaked:

Each step in a task can be marked as a an Alt step; this means that it gets run if and only if the step immediately before it failed - for example, if a step that tries to extract the value of a particular part of a results page can't do so because its XPath doesn't match anything in the document. Usually, such failures cause the whole task to fail, but alt steps provide a recovery mechanism for such situations. A common use is setting the hit-count to zero in a

searchtask when the part of the document that's supposed to say "Showing hits 1-10 of 3,456" is not present.Because connectors are working with web-sites, and because web-sites are complex things sitting on the other side of a global network, some operations don't always work they way they should. Occasionally a step will fail for transitory reasons -- for example, a result page appears to have loaded when in fact it is not complete, but will be in a few more seconds. To cope with situations like this, the Platform provides a Retry step, which can be placed after a step that might fail. It specifies how many times to retry the failed operation before giving up, and how long to wait between tries.

While alt steps and the Retry step are both ways to recover from errors, the Next If step provides rudimentary control flow based on the values of arguments. Unlike Retry, it appears before the step it controls, and says to run that step only if a condition is satisfied: that a particular argument matches a specified value.

See the reference manual for more on the Retry and Next If steps.

Tasks contain test arguments

While developing a connector, it's often useful to run either a whole task, or one of the task's steps in isolation. The behavior of the tasks and steps in a running connector depends on the arguments that are submitted with the service request (e.g. the database name and the various parts of the query, for a search request). To emulate this when testing tasks and steps within the builder, some values are needed for these arguments. For this reason, test arguments can be specified, and are saved as part of the task.

Note that the values of these arguments do not affect the behavior of the connector when providing a service by running under the Engine. At that time, the test arguments that were provided for the benefit of the builder are ignored, and the values sent as part of the service request are used instead.

Tasks contain tests

Finally, tasks may contain tests. Each test consists of running the task with the supplied arguments, then making assertions regarding the results generated -- for example, that that hit-count is a non-negative integer. Since the testing facilities are under active development at the time of writing, and liable to change, they will not be described in detail.

Step

The last concept in the model is that of a step. Although tasks also carry test arguments and tests, these are accessories: the core of a task is the sequence of steps that it contains, and it is only these that affect the actual functioning of the task when is run in the Engine.

A step has a type, chosen from a finite set of (at the time of writing) seventeen. The type can be thought as the operation code of the instruction that runs on the domain-specific virtual machine that is the Engine. We frequently refer informally to step types simply as steps, as in "Oh, use a Parse by Xpattern step for that".

Some steps are appropriate for init and search tasks, some for parse or next, and some are applicable everywhere. Analyzing a pool of extant connectors shows the following patterns of usage:

inittasks use steps: Click, Open URL, Extract regex, Retry and Set form value.searchtasks use steps: Click, Open URL, Normalize URL, Parameterized result, Extract regex, Retry, Set preference, Constant result, Set form value, Next if, Submit form, and Transform result.parsetasks use steps: Click, Join result, Open URL, Normalize date, Normalize URL, Parse by regex, Parse by Xpattern, Retry, Split result, and Transform result.nexttasks use steps: Click, Extract regex, Retry, Set preference and Constant result.

It's apparent that the very general Click and Retry steps are used in all four tasks, and Extract regex in all but the parse task, while more specific steps such as Parse by Xpattern and Normalize date are used only in the parse task.

Step configuration

Each step carries its own configuration; and the type of the configuration varies by step type. For example, an Open URL step's configuration consists either of the URL to go to, or the name of an argument that contains the URL to go to. At the other end of the complexity scale, the Transform step is configured by the name of a result-list to act on, a result within that list to use as input, a regular expression to apply to the value that result, a string to substitute for the portion that matches the regular expression, and the name of a result within the list to write the result of the substitution back to. (This sounds more complicated than it is -- stay with it, it will all make sense when the time comes.)

The different configuration parameters of each step type are surveyed in the reference section of this manual.

XML Representation

The structure of connectors, as described here, lends itself nicely to a simple expression in XML. The XML format used is described in a separate section. The Relax-NG specification for that XML format can be thought of as a formalization of the description on this page.

Components

Builder

The Connector Builder is implemented as an extension for the Firefox web-browser, and requires a version in the 3.x series. Because of its tight integration with the browser, porting the Builder to IE, Sarafi, Chrome or Opera would be a significant undertaking -- most likely not possible at all for some of these browsers, as they do not all make the same facilities available to extensions.

There are two ways to install the Builder: most users will install from a .xpi file, the standard packaging for Firefox extensions. The most recent release of this can be obtained from Index Data -- email us for details. Developers and others who need the bleeding-edge version of the Builder can check out the source code and modify their Firefox configuration by hand to run the extension directly from the source. This is done by adding a file named cfbuilder@indexdata.com to the extension directory, containing only a single line that specifies the directory holding the Builder source-code: for example /usr/local/src/git/cf/builder (depending on where the source was checked out). The location of the extension directory is different on different platforms -- for example, on MacOS X, the global extensions area is /Applications/Firefox.app/Contents/MacOS/extensions/

When running Firefox in a configuration that includes the Builder extension, the browser can be used normally, invoking the Builder only when required. It can be brought up by choosing View → Sidebar → Connector Framework: Builder.

When the Builder is active, the browser is split into two vertical columns. On the left is the Builder; the right column contains the old contents of the browser window. Its contents are unchanged, and all the navigation buttons (Back, Forwards, Reload, Home, etc.) work as usual on it. The Builder does not impinge on the normal use of the browser.

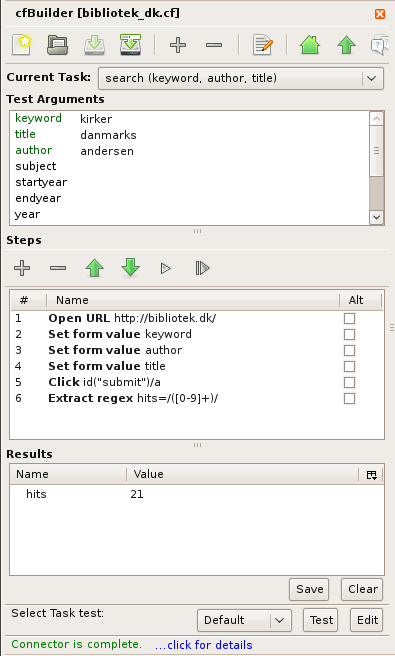

The builder itself is split vertically into three main areas: at the top, and area concerned with the current task; below that, a list of the steps that make up that task; and below that, a display of results generated by the last connector run. Slider bars between these sections adjust the amount of vertical space allotted.

In more detail, top from to bottom, the Builder presents:

A title bar showing the name cfBuilder and a close button.

A toolbar containing buttons to create a new connector, load an existing connector, save the current connector or save it as a new file, add a task, remove a task, edit the connector's metadata, go to the connector repository's administrative home, or upload the current connector to the repository. These operations will be discussed in more detail below.

A dropdown for selecting which task to work on. Nearly all connectors have Search, Parse and Next tasks; others can be added, notably Init.

A list of the selected task's arguments. For example, Search tasks have arguments for the various fields they might search, and Init tasks have arguments for the username and password that can be used in authentication. When a connector is run as part of a searching solution, values for these arguments are submitted at run-time (e.g. the query terms); the Builder allows example values to be saved, for use when running the connector while developing it.

A toolbar containing buttons that relate to steps within the selected task: with these, it is possible to add a step after the currently selected one, delete the current step, move it up or down the list, run the current step in isolation, or run the whole sequence of step that make up the task. These operations will be discussed in more detail below.

The list of the actual steps, showing for each step its type (in bold), a summary of its configuration (in normal font), and an indication of whether or not it is an Alt step (i.e. one that is run when and only when the step before it fails).

An area containing the results generated by running steps of the selected task. For Search tasks, this is typically a single number, the hit-count; for Parse tasks, it is a list of records, each containing multiple fields.

An area for creating and running tests for the current task. At present, this requires providing paths through the result structure and regular expressions that the contents of those paths must match.

Finally, a status line indicates whether or not the connector is considered complete, and if not provides a link to a popup that shows which tasks are missing.

Engine

The Connector Engine (documented here) is a C++ based library that embeds the Gecko layout engine which underpins Firefox, Thunderbird and Firefox OS. It exposes a binding to the same core Javascript code for loading and running connectors that the builder uses. Several tools build on this library:

- CFWS is a webservice for running connectors. It's implemented as a Metaproxy plugin.

- cfrun is a standalone binary. Convenient for running connectors at the command line, in shell scripts, and cron jobs.

- cfzserver is a more specialised Metaproxy plugin that exposes a Z39.50 interface to connectors based on our search template.

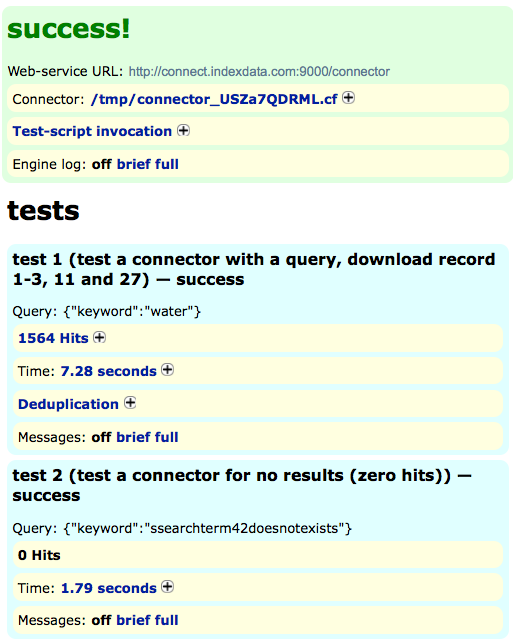

Repository

The Connector Repository (documented here) is an administrative tool used to manage all connectors available for use, development or enhancement via Index Data’s Connector Platform.

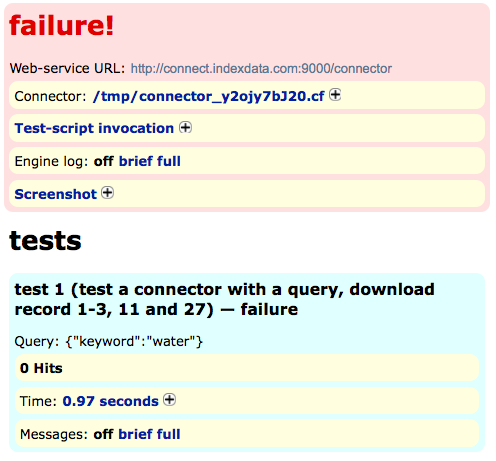

The repository presents a single, unified interface for all connectors, including those in production, in development, or not currently in use. It also features automated testing for all existing connectors, as well as an integrated display of reliability statistics drawn from actual production usage.

Data Model

The basic interface

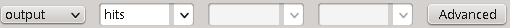

In many steps you will see an interface like this. It's for specifying where to store or retrieve a value.

The several drop down boxes each mean different things, but all of them are still quite related as you may find out in the advanced section

First drop-down: Container

In the first box you can choose from a set of containers for storing and retrieving values:

- input: arguments passed to the Task

- output: returned on Task completion

- session: values that you wish to be available in all Tasks (not reset between runs)

- system: runtime values, currently only has a

locationkey containing the current URL - temp: starts empty every time and is never returned

The data browser at the bottom left of the builder reflects the values in each container as they were at the end of the last Task execution.

Second drop-down -> Keys

You can choose from a list of keys (names for the value) that were defined in the Template, or you can name values as you please.

Generally the Template will provide all the names which external programs using the connector will expect in the output container and all the input they may pass in.

Third and fourth drop-downs -> Records

Some templates may specify a key which refers to a list of records. In the search template that ships in our default configuration there is only one: "results". The third drop down allows you to apply a step to a particular field in every record within such a key.

The fourth and final dropdown is for lists within each record. The search template defines the key "item" here to refer to holdings records containing the location, availability, etc.

Record lists are currently only populated by the XPattern step.

Appending, Concatenating and Replacing

When configuring the target for a step to write to you will notice three radio buttons providing options for how to handle any values already at that key.

- append: leave existing values and add the new one to the end of the list

- concatenate: append the new value to the end of the string in the target key

- replace: replace any existing value with the one generated by the step. If the step would produce an empty result the key will be deleted.

In-line value replacement.

Many steps will accept a reference that will be replaced with whatever data is currently in the specified location. To distinguish this from the rest of the string you use the unlikely {$ combination, like so: {$.container.key}. For example, you could configure Go to URL with a URL of "http://www.site.tld/sections/{$.input.database}" and the last part of the URL would be replaced by the parameter passed in as "database" when the Task is run. This shorthand will only take the first value in any given array. To display all values (for example, a list of authors delimited by semicolons), use the Join step to concatenate them together into one string in the temp container.

Advanced mode

Behind the scenes the steps find the values to run with by generating a JSONpath query from your step configuration. When you click on the "Advanced" button it switches to an interface that expresses your setting as a JSONpath that matches a set of objects and the key to operate on.

Each instance of a Task contains a data object with properties that store the input, output, temp objects for the current invocation. The data object also contains references to the session and system objects stored within the current Connector instance so that they persist for the lifetime of the Connector session.

The basic interface only applies to structures like the ones described in the search template. At the top level, each space (input, session, etc.) is an object where each key contains an array. This allows for multiple values to be stored and for consistency with the record contents. All steps operate on arrays. The only exception is that, for compatibility reasons, Tasks can still accept scalar properties in input.

If, instead of scalars, an array property contains objects, it is treated as a list of records. The only step that typically will output an array of objects into a key instead of an array of scalars is XPattern. Each object in this array has several array properties. For example, a record representing a book would have an author property containing an array of strings containing the names of all authors of that work.

Here in JSON notation is an example with structures typical of search template data:

{

"input" : {

"keyword" : [ "water" ]

},

"output" : {

"somevalues" : [ "one", "two", "three" ],

"results" : [

{

"title" : [ "First Result" ],

"author" : [ "Bob", "Jane" ]

},

{

"title" : [ "This is My Last Result" ],

"author" : [ "J. Shaddix" ],

"item" : [

{

"location" : [ "Library of Alexandria" ],

"callno" : [ 5555 ],

"availability" : [ "On Hold" ]

},

{

"location" : [ "Library of Alexandria" ],

"callno" : [ 5555 ],

"availability" : [ "On Hold" ]

},

{

"location" : [ "Libraria Lello" ],

"callno" : [ 42 ],

"availability" : [ "Missing" ]

}

]

}

]

},

"temp" : { },

"session" : {

"proxyip" : [ "127.0.0.1" ]

},

"system" : {

"location" : [ "http://library.tld/catalog.cgi" ]

}

}Steps access the data using the JSONpath query language. The data object is passed along with the path specified in the advanced interface or generated from the basic interface. During step execution this path is used to fetch objects to operate on. A separate field in the advanced interface specifies which key of each object is used. This layer of indirection is necessary as JSONpath only returns the matching values; no context is provided. Because there is no way to pass a reference to a scalar it would be impossible to modify a set of matching strings. Objects and arrays are passed by reference and so we collect a set of objects and modify the values within the key. While we could modify the arrays directly, we would be unable to add or remove the key from the parent object.

Generally speaking you can use arbitrary JSONpaths to select objects in steps. However, if a step is to output the result to a different path than the source, there must be exactly the same number of objects matching the destination path.

Detailed documentation of JSONpath is available from the author. Here are some common examples of how one might use some of its features with Connector Platform data:

Top level values

Access the hits value in the output space:

Path: $.output Key: hits

The path selects the object matching $.output ($, the root of the path, is the current task's data property). The step will then operate on the array in the hits key of that object.

Record field

Operate on the author of every record in the results keyword:

Path: $.output.results[*] Key: author

[*] indicates that it should select all items within the $.output.results array. Those items are the record objects from which the step obtains the author value.

Choosing among records---JSONpath filtering

Rather than use * to select every value in an array you can instead choose a subset of the objects to work with by including a Javascript expression wrapped in ?(...). This is evaluated against each element and only those where the expression returns true are included in the result. Within the expression you can use the @ symbol to refer to the current element.

As an example, you might use a Copy step to copy the "abstract" field into "description" for records that have no description. To do this you would use a JSONpath of $.output.results[?(!@.description)] and a key of abstract in the source and description in the destination.

Perhaps you want to delete journal title fields starting with "N/A". Or run pretty much any step on some set of records based on nearly any criteria. JSONpath, while awkward at times, can usually pull it off.

Core features

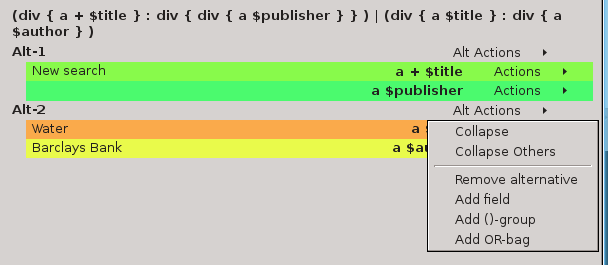

Alt steps

In the builder, to the right of the Steps list, is a column of checkboxes labelled "Alt". These allow you to mark a step as an alternative, to run in case the preceding step fails. Alternatives will be tried in succession. For instance, the second alternative will only run if the first alternative ran and failed. If the second alternative also fails, then the third alternative will run, and so on.

To run multiple steps on failure see [Try / Catch](#steps-try_catch].

Page Load Detection

Sometimes you don't want steps to fire in rapid succession. If you use the Go to URL step to open a web page and proceed to use Set form value, it needs to finish loading the page before it can access the form element in order to fill in the value. Were it to try beforehand, it would fail with a "destination element not found" error. To handle this situation, steps such as Click, Go to URL, and Submit offer a checkbox marked Wait for page load?. If clicked, task execution will not proceed to the next step until the web page has finished loading.

Wait configuration

Usually just ticking the box will handle most needs but there are more settings available from the "Configure load detection..." button. You can set the amount of time to wait (in milliseconds) before the system gives up on waiting and proceeds with the next step. The default is 30000 (30 seconds).

The other option available is to select the mechanism by which page load is detected, each of which may expose further configuration. A detailed description of these methods follows. However, such information should only be necessary for debugging the thorniest of connectors.

nsIWebProgessListener and nsIObserver

When a page starts to load the corresponding nsIRrequest object is added to a list. Stopping causes the request to be removed and the list to be checked for more in progress. If it's empty, the system waits a small amount of time in case any new page loads are forthcoming. This defaults to 20ms but can be configured arbitrarily as the "New load threshod". Once this time has passed and if the list is still empty, execution resumes with the next step.

This is the default method of load detection and entails implementing two different XPCOM interfaces. It uses nsIWebProgressListener to receive notifications about the state of the window, most importantly STATE_START and STATE_STOP.

XMLHttpRequest (AJAX) connections do not trigger this. In order to listen for these, we implement the nsIObserver interface and subscribe to the http-on-modify-request (treated as the start of the request), http-on-examine-response and http-on-examine-cached-response (stop) notifications. This is optional---perhaps the only XHR events on the page are ad-related and waiting for them would only slow things down.

On very rare occasions there are requests that fail to trigger the appropriate notification when they complete. There is an option to "Remove non-pending requests" that will be more aggressive at pruning the list of outstanding requests at a small risk of removing some before they start or while they are still waiting. See the nsIRequest.isPending() documentation for details.

nsIObserver only

Rather than use both interfaces, this method relies solely on nsIObserver. It uses the same three notifications as above but waits for all connections rather than only tracking XMLHttpRequest. This is simpler and may become the default in future. We've left the original approach intact to preserve behaviour of existing connectors and for more realistic performance in the builder. There is only one @mozilla.org/observer-service;1 for the whole browser which means running the connector in the builder while you have other pages performing requests may cause it to wait longer than necessary. In practice I find I have to have a few other tabs open and fairly actively making connections to even notice this effect.

Window event

When you're really only concerned about the document being opened and aren't interested in waiting for other frames, redirects or AJAX requests it is enough to wait for one of the events dispatched to the window object. The ones we have found useful for this purpose are configurable here: DOMContentLoaded, load, and pageshow.

Retrying

An alternative to waiting for the page containing the target object(s) to load is to simply keep attempting to access them until they become available. To this end you can configure the Retry step as an alternate following a Click, Set form value or other step that targets an element in the page. With the Alt checkbox ticked, the Retry step will only fire if the preceding step fails, allowing the task to keep running the element-requesting step until it succeeds.

A similar approach can be used to halt task execution until a page element has a particular value. Follow an Extract value step with an Assert that tests your condition. The alternative to run if that Assert fails can be a Retry configured to wait an appropriate amount of time and go back two steps causing it to extract the new value and test it again.

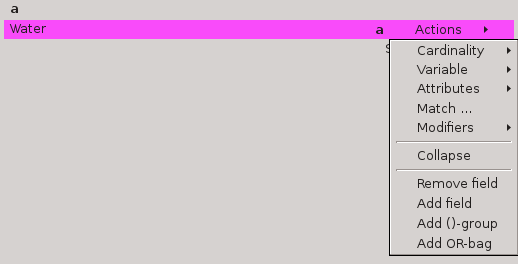

Xpattern

Grammar

The following defines the grammar of the XPattern:

<pattern> ::= <orlist> | <orlist> ':' <pattern>

<orlist> ::= <node> | <node> '|' <orlist>

<node> ::= <elementname> <opt-cardinality> <opt-assignment> <opt-modifierlist>

<opt-attrlist> <opt-childnodes> <opt-negation> |

'(' <pattern> ')' <opt-cardinality> <opt-assignment>

<elementname> ::= <name> | "any" | "#text" | "#comment"

<opt-attrlist> ::= "" | "[" <attrlist> "]"

<attrlist> ::= <attr> | <attr> "," <attrlist>

<attr> ::= "@" <name> <opt-relation> <opt-assignment> | <regexp>

<opt-relation> ::= "" | <compare> <value>

<compare> ::= "=" | "~"

<opt-modifierlist> ::= "" | <modifier> <opt-modifierlist>

<modifier> ::= "-html" | "-xml" | "-textcontent" | "-whitespace"

<opt-childnodes> ::= "" | "{" <pattern> "}"

<opt-assignment> ::= "" | "$" <name>

<opt-cardinality> ::= "" | <plain-cardinality> | <plain-cardinality> <nongreedy>

<plain-cardinality> ::= "?" | "*" | "+"

<nongreedy> ::= "?"

<regexp> ::= "/" STRING "/"

<value> ::= INTEGER | "\"" STRING "\""

<opt-negation> ::= "" | "!" <node>

<name> ::= HTML-friendly name, i.e. string starting with a-z followed by a-z0-9_. Match is case-insensitive.Whitespace is generally ignored

Regular nodes

The XPattern is basically a sequence of nodes, for example

BR : A $title : B $authorThe node names (BR, A, B) match corresponding HTML tags. Although the match is not case sensitive, traditionally the tags are written in upper case. The pattern above would match an HTML fragment like

<br/>

<a href="...">Some Title</a>

written by <b>Some Author</b> and return one hit, with a title and author. If there happens to be another segment like that on the web page, that will be returned as another hit. Note that the text "written by" is skipped, as it is not inside a matched node.

HTML tags can be nested to any depth. XPattern supports nesting too. Child nodes are indicated by enclosing them in curly brackets, as in

TR { TD $title : TD $author }which would find two hits in something like

<table>

<tr>

<td>The Little Mermaid</td>

<td>H.C. Andersen</td>

</tr>

<tr>

<td>Hamlet</td>

<td>William <u>Shakespeare</u></td>

</tr>

</table>Note that in the second example, the value returned for author would be "William Shakespeare". It does not matter that the tag contains other tags (in this case, U for underlining the last name).

if the pattern had been something like

TR { TD $title : TD $firstname { U $lastname } }it would have returned 'Shakespeare' as the lastname, and only 'William' in the firstname. But that would have missed H.C. Andersen completely.

Cardinality

XPattern can handle nodes that are optional, repeating, or both.

Optional: ?

A question mark indicates the node is optional.

A $title : I ? $author : B $year : Pwould find the two first hits (but not the last one) in something like this:

<a href="...">First title</a>

by <i> First author </i>

<b>1999</b> <p/>

<a href="...">Second title</a>

<b>1999</b> <p/>

<a href="...">Third title</a>

by <i> Author </i>

and <i> Another Author </i>

<b>1999</b> <p/> Repeating: +

A plus indicates a repeating node. There has to be at least one of them.

A $title : I + $author : B $year : Pwould find the first and third hit in the HTML example above, but not the second, as it has no author. The third hit would have two separate authors.

Optional repeating: * An asterisk indicates that a node is both optional and repeating. That is, there can be zero or more of them.

A $title : I * $author : B $year : PThis would find all three hits in the HTML example above.

Greediness: +? and *? By default all repeated patterns are greedy, meaning that they match as much as possible. Sometimes it is desirable to match as little as possible instead. This can also be much more effective, especially with ANY, which can try to match the rest of the document, before backtracking to only a few nodes.

As an example

A $title : ANY * : B $year would match one hit from the HTML example above, namely the first title and the last year. This is probably not what you want. A non-greedy match solves this problem:

A $title : ANY *? : B $year Now the ANY matches a minimal set of nodes, that is the author(s), and the B will match the first year. This way we get three hits from the same HTML example, each with a title and year that belong together.

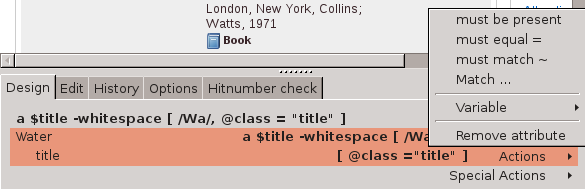

Attributes

HTML nodes can have attributes. Xpattern can collect their values into result variables, and it can require that a given attribute exists, and even that it contains a given value.

Attributes are enclosed in square brackets. They come after the cardinality and assignment.

Attribute names start with '@', as in XPath.

Collecting values

Often we want to extract the attribute value, for example the URL from an A tag.

A [ @href $url ]Matching attribute names

Some times we want to match only nodes that have a given attribute.

SPAN [ @highlight ]will match any SPAN that has a highlight attribute, no matter what value it has

Matchign attribute values

Often we want to match only nodes that have a given value in an attribute

SPAN [ @class="title" ]or with a regular expression:

A [ @href ~ "author" ]

A [ @href ~ "indexdata\.com/search\.cgi\?title=[A-Z]" ]Note how the dots are escaped with backslashes, so they won't match any other characters, like they do in regular expressions.

Text match

Occasionally it is necessary to match the content of a node, not the structure of the page. This can be done with the text match.

The text match is placed together with the attributes inside the square brackets. The match is case sensitive, and will match against the text-content of the whole node. It is a full-fledged regular expression.

Typically this is used for web pages that do not have enough structure, or that have multiple types of hits, when we only want some of them. For example

TR { TD [ /Book/ ] : TD ... }can be used to match only books from a table-formed page, where the first element tells what type of thing it is, as in

<tr>

<td>Article</td>

<td> ...</td>

</tr>

<tr>

<td><b>Book</b></td>

<td>Some-Author</td>

<td>Some-title</td>

</tr>Note that it does not matter if the TD element contains other tags, the match is against the full text content of it.

This kind of thing is especially useful when combined with alternative sub-patterns, as in

( TR { TD [ /[Bb]ook/ ] : TD ... } ) |

( TR { TD [ /Article/ ] : TD ... } ) Note that in the example above, the regular expression makes use of the character class to match both 'b' and 'B'.

Special nodes

ANY

As the name implies, the ANY node matches any kind of html node. It is useful for skipping uninterested parts of a hit, especially when modified by the non-greedy repeat *?

#text

Normally the XPattern collects the text from inside regular nodes. Occasionally it is necessary to descend to a lower level, and pick up a #text node directly. For example in

<span>

Some Author:

<i>Some title</i>

Lots of <b>description</b>

</span>This can be extracted with something like

SPAN { #text $author : I $title : ANY * $description }Note that since the description contains various tags, we use ANY * for it. This will be a greedy (maximal) match that will take the rest of the SPAN. The same result could be achieved with

SPAN $description { #text $author : I $title }where the whole SPAN goes into the description, except for the author and title. This is cleaner and probably more efficient way of doing it.

#comment

Very rarely it happens that the web page contains useful information inside a HTML comment

<span><!-- id 99999--> <b> Author</b>...This can be extracted with the #comment pattern

SPAN { #comment $id : ... }Since the graphical editor works on the level of tagged nodes, it can not highlight #text nodes. And certainly not #comment nodes, as they are not visible at all.

Modifiers

There are some modifiers that change the way XPattern matches and collects data.

-whitespace

The -whitespace modifier causes the XPattern not to clean up white space around and in the node, but to collect it all as it is.

-textcontent

Normally, the XPattern collects the text of the node, and then recurses deeper into the DOM tree to get the rest of the tet. The -textcontent modifier makes it collect the complete text content of the node and all its children. The XPattern still continued into child nodes, and may collect them into different $variables. This is useful if you have something like this:

<span>A collection of plays by <A href=...>William Shakespeare&</A> that everyone should know</span>By collecting the description with something like

SPAN $description -textnode { A ? $author }you get the author in $author, but your description will contain the whole text. Without the -textnode modifier, your description would be A collection of plays by that everyone should know, because the author was going into another variable.

-html

The -html modifier gets the HTML code of the node, with all tags, attributes, comments, and everything. This is useful in some rare cases. In the example above, using -html modifier on the SPAN would capture

A collection of plays by <A href=...>William Shakespeare&</A> that everyone should know-xml

The -xml modifier is almost like the -html, except that it produces a dump of the dom-tree under the current node. It should have all the nodes, comments, and text, but may contain a different kind of white space than the original source. It will also work for XML documents, which the -html modifier can not work on.

XPattern modifiers were introduced in version 2.18

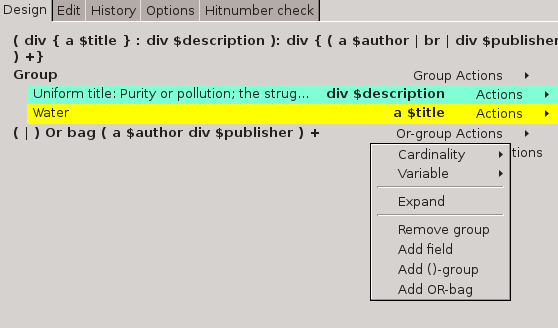

Groups and alternatives

Grouping in XPattern is indicated by (round) brackets, and alternatives by the pipe character '|'.

Alternatives |

Some web sites are not consistent in the way they present the results. For example, they can put the author before the title, or after it.

SPAN { B $author : A $title } |

SPAN { A $title : B $author }This will match either of the two alternatives. This is fine, when the alternatives are single nodes, as above (it does not matter that the SPAN contains a complex sequence of child nodes, itself is still just one node).

Or-bags

A common structure is to have a repeating group with alternative entries, as in

(

SPAN $author [ /Author:/ ] |

SPAN $title [ /Title:/ ]

) +This will match any sequence of authors and titles, no matter in which order they come. Sometimes it is useful to put a ANY in the end of the list, to skip those parts that we are not interested in.

Alternative groups

When the structure is more complex than above, it may be necessary to use alternative groups, as in

BR :

( B $author : A $title ) |

( A $title : B $author )Named groups

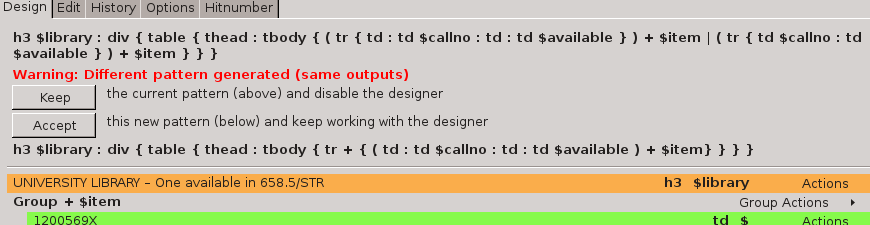

Named groups can be used to extract more structured information from a web page. A typical example is holdings information from a library catalog:

TR+ { (TD $callno : TD $available ) $holding }This will produce structured hits like the following XML:

<holding>

<callno>1234</callno>

<available>not yet</available>

</holding>

<holding>

<callno>6789</callno>

<available>real soon now</available>

</holding>Alternative XPatterns

Some sites show results in different formats, for example books in one way, and article journals in a completely different way. Then it may make sense to use an XPattern that combines the two separate alternatives into one XPattern like this

( SPAN { B $title : U $isbn } ) |

( SPAN { B $title : I $issn } )The designer will recognize such patterns, so you can get far with clicking and highlighting.

Negation

Some times you need to match a sequence of any nodes, as long as they are not bold. This can be done with

ANY + ! BHere is a more complex example

Suppose you have a page with bibliographic data coming in no special order. All you know that the title is always first, and everything is in plain text, separated by BR tags, like this:

Title: Master and Commander <br/>

Author: Patrick O'Brian <br/>

The first book in the series. <br/>

Captain Jack Aubrey, R.N., befriends Stephen Maturin, ship's surgeon and intelligence agent... <br/>

Publisher: W.W. Norton<br/>

Title: Post Captain <br/>

Publisher: W.W. Norton<br/>

Author: Patrick O'Brian <br/>

The second book in the series. <br/>

"We've beaten them before, and we'll beat them again." <br/>

In 1803 Napoleon smashes the Peace of Amiens...<br/>Since you know every hit starts with a title, you start your pattern with that:

#text $title [/Title:/] : brSince the rest can come in what ever order, you use an or-bag to collect matching lines

#text $title [/Title:/] : br :

( ( #text $author [/Author:/] : br )

| ( #text $publisher [/Publisher:/] : br )

) +So far so good. But you need the description too. If you just add a default line to the or-bag like this

#text $title [/Title:/] : br :

( ( #text $author [/Author:/] : br )

| ( #text $publisher [/Publisher:/] : br )

| ( #text $description : br )

) +things go horribly wrong. This pattern will match the whole page in one record, with multiple titles and authors. You need a way to stop the or-bag from matching the next title. Since neither the author or publisher lines will match it anyway, you need to put the stopper on the description. That's where the negation comes to its own:

#text $title [/Title:/] : br :

( ( #text $author [/Author:/] : br )

| ( #text $publisher [/Publisher:/] : br )

| ( #text $description ! #text [/Title:/] : br )

) +Now the description line will not match any #text (that is not matched above), except if that one happens to contain the word Title.

The negations are not restricted to single nodes:

TD { SPAN { B $title } } ! TD { SPAN { B {A} } }which matches a TD that contains a SPAN that contains a B, except if the B contains an A. Of course the same can be expressed more simply:

TD { SPAN { B $title ! B {A} } } Keyboard shortcuts

NB: On OS X we use Command instead of CTRL as per Firefox.

| Global | |

|---|---|

| Add step | CTRL-ALT-T |

| Add comment | CTRL-ALT-C |

| Next task | CTRL-ALT-LEFT |

| Previous task | CTRL-ALT-RIGHT |

| Next step | CTRL-ALT-DOWN |

| Previous step | CTRL-ALT-UP |

| Next test | CTRL-ALT-, |

| Previous test | CTRL-. |

| Run task | CTRL-ALT-G (go) |

| Run selected | CTRL-ALT-H |

| Show CF builder | CTRL-ALT-F |

| Show metadata editor | CTRL-ALT-M |

| Show step scratch pad | CTRL-ALT-P (pad) |

| Repo test | CTRL-ALT-E |

| Ninja test | CTRL-ALT-J |

| Step list and scratch pad | |

| Copy | CTRL-C |

| Cut | CTRL-X |

| Paste | CTRL-V |

| Delete | Del |

| Disable | D |

| Select sequence | hold Shift, use arrows |

| Select multiple | hold Ctrl, use arrows and space |

| New step window | |

| Show all steps | CTRL-A |

Tips and tutorials

My first connector (The AMNH Digital Library)

Let's build a connector!

Let's say that we want to make a connector for the online digital library of the American Museum of Natural History. The AMNH has digitized more than a century of publications and makes them freely available, so it's a useful resource to make available to metasearch systems.

Reconnaissance: searching the website by hand

Before we try to build the connector, we'll search the site using an ordinary web-browser to check that it supports the necessary operations.

Start by navigating to the the digital library home page at http://digitallibrary.amnh.org/dspace/

We notice that it's possible to do a simple keyword search from the front page, and that there is also a link to an advanced search page. To keep things simple, we'll start with the keyword search, and return later to the advanced search.

In the box below the caption Search by words or numbers, enter the query dinosaur. (In the radio-button group below, leave All Publications checked: later on we can treat the publications separately if we want to, but for now we will accept all matching documents from any of the listed publications.) Hit the Search button.

The search-results page page shows the first 10 of 85 hits. These are helpfully laid out in a table, each row giving the publication's date, its title including some citation information (with a link to a detail record), and the authors (including their birth and death dates in most but not all cases.)

Click through the first hit, Relationships of the saurischian dinosaurs. American Museum novitates ; no. 2181. The detail record includes more information about the resource and a link to a PDF of the full text. Return to the results list.

Now click on the next link at the bottom of the table containing the ten hits. This leads to a page that shows results 11-20. Click on this page's next link, and the destination page shows records 21-30.

All is as it should be. Now we can start to build the connector, which will perform all the same actions on our behalf that we just performed by hand.

Building the connector

Open the Builder sidebar, either by Selecting View -> Sidebar -> Connector Framework: Builder from the main menu, or using the shortcut Shift-Ctrl-C.

If this is the first time you've used the Builder in this browser session, then it will be set up ready to make a new connector. If not, you may have another connector already loaded: in this case, start a new connector by choosing Tools -> Connector Framework -> New from the main menu or clicking on the New Connector icon that it on the left of the sidebar's top toolbar.

In the main window, go back to the AMNH Digital Library home page. Now we're ready to begin.

No init task

Using the AMNH site does not require any authentication, so there is no need for an init task in this connector. We can get straight to work on telling the connector how to search.

The Search task

Make sure that the Current Task: dropdown near the top of the Builder sidebar is set to search -- if it's not, then change it.

The first step: going to the search page

The first step of submitting a search is getting onto the page where it's to be submitted from, so make a new Go to URL step. Click on the Add Step button -- the big plus sign that is the first button in the Steps toolbar about half way down the sidebar, and the Step Browser pops up, offering you a choice from among all the different types of step. Double-click on Go to URL (or click on it once, then hit the Add button at the bottom of the Step Browser).

Three things happen when you do this:

The Step Browser closes itself.

A new and empty Go to URL step is added to the task, and can be seen in the step list just below the Steps toolbar.

The step configuration pane leaps into existence, to the right of the sidebar and below the main window. This pane contains different controls depending on the type of the currently selected step.

For a Go to URL step, the important element of the configuration is the location to go to. This is presented as a textbox that a URL can be typed or pasted into, but since we are already on the right page, we can take the shortcut of clicking the Use current page button to the right of the textbox.

Clicking the button makes two things happen:

The textbox in the step configuration pane is filled in with the URL of the current page (i.e. the AMNH Digital Library home page).

That change in the state of the step is reflected in the step list over in the sidebar: the step's summary now contains the URL as well as the type.

The URL in the step list also changes if you edit the URL in the step configuration pane by hand. The step list always contains a summary of all the steps that make up the current task. Each step's summary consists of the step type, in bold, followed by an informative snippet of the configuration, which again is different depending on the type of the step.

You can always test a step by clicking on the Play button in the Steps toolbar: it's the right-pointing triangle that is fifth from the left. Since you're already at the AMNH page, navigate away to any page you like, and then hit the Play button. The builder will run the new Go to URL step, which will take you back to the AMNH Digital Library front page.

Setting the form value

Having loaded the search page, the next step is to type in the value to be searched for. To see that in action, we're going to need a value to test with, so enter the search term dinosaur as the keyword argument in the list of Test Arguments near the top of the sidebar. These test arguments are saved as part of the connector, although they are not used when running the connector in production: they are are purely for the benfit of the Builder and the developer using it.

Now we can add a Set form value step. Open the Step Browser and double click on the step name. The new, empty, step appears below the existing Go to URL step in the step list, and the step configuration pane changes to show the configuration of the new step.

Click on the Go to URL step in the step list and watch the configuration pane change to show the URL that was specified in the configuration for that step; click on the Set form value step in the list and the configuration pane changes back again.

In the configuration pane, the textbox labelled Form field to populate should contain an XPath specifying which element on the page is to be set to the specified argument. Rather than typing in an XPath, it's usually easier to use the node selector, and that's what we'll do now. Click on the Select node button. Now as you move your mouse around the contained web page, a dotted purple outline follows the mouse, showing which of the page's elements is being pointed to. Point at the search box and click: the textbox in the configuration pane is filled with a complicated XPath that designates the selected textbox.

Now we can test the new step. Hit the Play button and the word dinosaur appears in the search box.

When building a connector for a more complex search form, multiple Set form value steps will be used to set multiple values -- for example, separate author, title and date values.

Submitting the search

Having filled in the form, we need to submit it to the server. There are two ways to do this: with the Submit form step or with Click. We'll use Click.

As usual, open the Step Browser and double-click on the Click step. As usual, the new step is added to the list in the sidebar, and its empty configuration appears in the step configuration pane.

As with the Set form value step, this is configured by an XPath that indicates which element of the page to click; and as before we can use the node selector to do this. Hit the Select node button, and click on the contained page's Search button to fill in the XPath.

Now you can click on the Play button to check that the form submission step works as intended. When you do so, the contained page will change to show the first page of results.

Extracting and cleaning the hit-count

When a Z59.60 or SRU client sends a search request, the response has to specify how many records were found, so our connector has to extract this information from the results page.

To do this, we will need two steps: Extract value and Transform result.

Add an Extract Value step, and in the new step's configuration pane use the node selector to choose the text area that contains the text "Results 1-10 of 85." Run the step to check that it works correctly. Now for the first time we are using the Results area near the bottom of the Builder sidebar: the single result, called hits, has the value "Results 1-10 of 85." (i.e. the content of the nominated area of the contained page.)

Now add a Transform result step. This is one of the most complex and powerful of all the steps, but for now we can ignore the five fields at the top of the configuration pane and use one of the pre-canned recipes at the bottom. Click on the Last number button, and note that the configuration fields above are filled in. Hit the play button to check that the transformation has worked correctly.

In this case there is an interesting wrinkle: the Last number recipe pulls out the last sequence of digits, commas and periods from the value it's working on, so that it can work on decimals as well as whole numbers. This has the undesirable side-effect that the terminating period of the sentence "Results 1-10 of 85." survives the transformation. As it happens, this is good enough: the numeric value of the string "85." is 85, so we don't need to do anything about this.

Extra credit: getting rid of that terminating period

If you are the kind of person who likes things to be neat and tidy, you can remove the period by adding another Transform result step, setting the Regular expression to \.$ and leaving the replacement text empty.

Testing the whole search task

The connector's search task is now complete. To check that it does what it should, you can use the Play All button on the Steps toolbar: it's the one on the right, and it looks like the Play button with an additional vertical bar to the left of the triangle.

When you press this button, each step in the task is run in turn. You will see the contained site in the main window switch back to the AMNH Digital Library home page when the Go to URL step is run, then the word dinosaur appear in the search box when Set form value is run, then the form being submitted when Click is run, and the page will change to the result list. Then, too quickly to see the individual steps happening, the hit-count will be extracted and transformed.

While this is happening, the step configuraton pane is replaced by a log explaining what the connector is doing. This can be useful when debugging complex connectors. As soon as you click on a step in the list, or add a new step, this log is replaced once more by the configuration pane.

Congratulations, you have completed your first task!

The Parse task

Make sure that the Current Task: dropdown near the top of the Builder sidebar is set to parse -- if it's not, then change it.

Once a connector has obtained a page of search results, it needs to pick that page apart into separate records, and the records into separate fields, in order to have useful information to report back. The Connector Framework supports two separate approaches to parsing result pages: parsing by regular expression or by XPattern.

The regular expression parser works directly on the HTML of the results page. As a result, it is very powerful and general, but tends to be an absolute pig to work with. It remains an important tool to use as a fallback when other approaches fail, but it has for most purposes been superseded by the XPattern parser, which works at a higher level, dealing with nodes of the parsed page rather than with raw text. Because it works at this higher level, the XPattern parser is able to offer tools that help you to build a pattern, often very easily.

Once data has been extracted from the page, it can be cleaned up using transformatins like the one we used in the search task to tidy up the hit-count.

Initial parsing

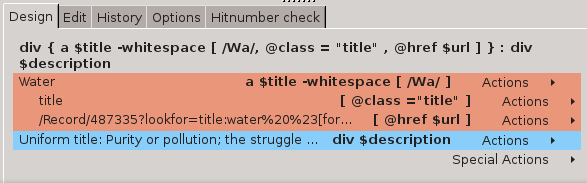

Use the Step Browser to add a Parse by XPattern step -- the first step of the new parse task. As usual, the step configuration pane appears. Rather than typing in an XPattern, we will use the Builder to help us create one: this is done by clicking on various elements of a sample record and specifying which field of the result record the values should go into.

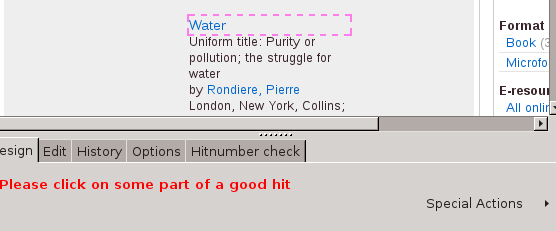

In the step configuration pane, click on the Start creating a pattern button. A new message appears in the configuration pane, "Please click on some part of a good hit", along with some buttons that we can ignore for now.

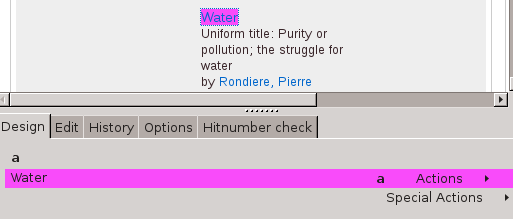

Do as the instruction says: click on the first interesting part of the first hit, the date "1964". Immediately this is highlighted, and an entry describing this field is added to the configuration pane, highlighted in the same colour. As well as the value, this entry contains a dropdown for specifying which field of the output record should be set from this part of the page. From this dropdown, choose date. We can ignore the other parts of the entry for now.

Click on the Add another node button below the newly created entry, and then on the next interesting part of the record, the title Relationships of the saurischian dinosaurs. American Museum novitates ; no. 2181". This is highlighted in a different colour, and a new entry is appended. From this entry's first dropdown, choose the field title.

This field in the results page has an important difference from the others: it is a link to the full-record page (which in turn has the link to the full-text PDF). We need to get at the full-record link so that it can be returned to the search client, so click on the title entry's Attributes button. Another line is added to the title entry, allowing an attribute from the title to be captured. In this case, we want the href attribute, which is selected by default, and we want to copy it in to url field: choose this fieldname from the dropdown after the going into caption.

Finally, we need to capture the author. Click once more on the Add another node button, then on the first record's author, "Colbert, Edwin Harris, 1905-", and set the fieldname in the dropdown to author.

Now we are ready to generate the XPattern. Hit the generate a pattern button at the bottom of the configuration pane, and the XPattern textbox will be filled in with a pattern describing the set of result-page fields and output-record fields we've nominated:

TD $date : TD { A $title [ @href $url ] } : TD $author

And now the magic happens. Click the Play button above the step list and gasp in awe at the parsed data that fills the Results area at the bottom of the sidebar! (You will need to resize the Results area in order to see more than one or two of the result records.)

Cleaning the parsed data

Looking at the records in the Results area, we can see that:

All ten records (numbered 0-9) have been correctly parsed out of the page.

The dates and titles are good (although we might later want to refine the titles by moving the citation information into another field).

The URLs are relative to the root of the website that hosts them rather than absolute.

There may be one or more authors, separated by semi-colons, and each author may have birth and death dates appended. Some authors are terminated with a period, some are not.

Cleaning URLs

Fixing the URLs is easy: add a Normalize URL step. There is no need to configure it: It Just Works. Click on the Play button, and all the URLs in the Results area will be transformed into absolute URLs that include the site name as well as the local path.

Cleaning authors

The authors are little more complex to deal with. Since the compound and irregular author strings we have are already useful, we will defer further work on these for now, and come back to them in the next lesson.

So we now have a complete and functional parse step, albeit one that we can refine later.

The Next task

Make sure that the Current Task: dropdown near the top of the Builder sidebar is set to next -- if it's not, then change it.

In general, a searching client will want more records than are displayed on a site's first results page. To support this, after having returned the first batch of result records, the Engine will repeatedly invoke the next and parse tasks to obtain records from subsequent pages.

For most sites, moving on to the next page of results is as simple as clicking a link, and that's the case here. Add a Click step to the new next task; click on the Select node button in that step's configuration pain, and then select the next link at the bottom of the contained page. Now hit the Play button on the Steps toolbar to verify that this step does indeed move on to the next batch of records.

Now that we have records 11-20 on screen, we can parse these using out existing task. Using the dropdown at the top of the Builder sidebar, select the parse task, and hit the Play All button on the Steps toolbar. Both the Parse by XPattern and Normalize URL steps will run, and the parsed result will be that the records in the Results are are replaced by broken-apart versions of those on this second page. (As a side-effect, the parsed-out regions of the contained page are highlighted: this can be useful when trying to work out why and XPattern is not doing what was expected.)

Refining the link

There is a problem here, though. Go back to the next task and run it again, and you will see that rather than stepping on to the next page of results, the site leaps straight to the last page.

That's because the XPath that the node selector genrated says "use the link number 9 in the table cell that contains those links", which works fine on the first page of results but not on the second, because different parts of the pages list are linked depending on where you are.

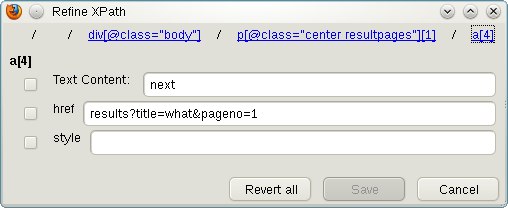

The solution is to change the XPath so that it always picks the next link. Go back to the first page of results, where you originally defined the Click step, and:

Click on the Refine xpath button in the step configuration pane.

In the popup, click on the component

a[9], which is the part of the path that specifies link number 9. (The previous components of the path explain where the relevant table cell is.)Of the three attributes of that next link that are displayed, choose Text Content, since that is the part of the link that identifies it as the right one. Check the box next to that caption.

Hit the Save button.

The XPath in the textbox is rewritten according to your modification, and now it is possible to step all the way through the result list by repeatedly invoking the next task.

Try it!

The connector for the AMNH Digital Library is now complete, at least in a primitive form. It could be used to provide searching for metasearch tools such as Masterkey. Save the connector by choosing Tools -> Connector Framework -> Save... from the menu, or using the Save button that is third from the left in the top toolbar. It's conventional to use a filename that ends with .cf -- for example, amng-diglib.cf

In the next session, we will refine this connector.

Refining the AMNH connector

We now want to refine the connector that we made for the AMNH digital library. There are two improvements that we might wish to make: supporting advanced search (i.e. searching specifically for an author or title); and cleaning the parsed data more fully. We will consider each of these in turn.

Advanced search

We noticed earlier that the AMNH site has an Advanced Search page. We can use this to make field-specific searching available to clients -- title, author and subject. (The site also supports searching for series, but the Builder does not have a search parameter for that, so we can't make use of it.)

To take advantage of the Advanced Search page, we'll make a second search task -- one that uses the title, author and subject arguments rather than the keyword argument. Once both search tasks are in place, we'll be able to tell which is which, when we pull down the Current Task dropdown, because one will be called search (keyword) and the other will be called search (title, author, subject) -- the Builder knows which parameters each task uses and, names the task accordingly.

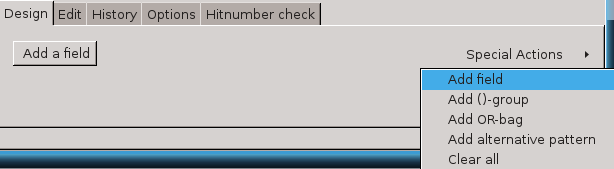

To create the new task, click on the Add Task button -- the large plus sign in the toolar at the top of the Builder sidebar. From the Add Task browser, double-click on search (or click on it once, then hit the Add button at the bottom of the Task browser). We need to add some sample parameters for testing, so in the Test Arguments area of the new task, let's set title to relationships, author to colbert and subject to dinosaurs. Now we're ready to start adding steps.

As before, we'll start by having the Builder navigate to the search page. Add an Open URL step to the new task, and set the Constant Location to http://digitallibrary.amnh.org/dspace/advanced-search.

The next part of the process is to populate the search form with the title, author and subject parts of the query. This can be awkward to do in search-pages like this one, where the choice of which fields to search is not statically determined but must be made by selecting from dropdowns on the search page. But in this case it's not so bad because there are three search fields available: we can set the dropdowns to constant values and use them to implement the three query parameters. If there were fewer search fields available, we would need to use conditional logic to determine at run time what selections to make from the dropdowns that control them.

Let's start by fixing the first dropdown to specify a title search. Add a Set form value step, hit the Select node button in the step configuration pane, and then click on the first of the three field-name dropdowns in the search page. When you do this, the Form field to populate field in the step configuration pane is filled in, and the dropdown itself opens up to offer the list of options. From this list, click on Title, and the step configuration pane's Populate with constant field is also filled in. Now test that the step works by manually changing that dropdown to one of its other values, then hitting the Play button to revert it to Title.

Next we need to set the value of the title argument into the form. Add another Set form value step, hit the Select node button in the step configuration pane, and then click on the first of the three entry boxes on the AMNH search page, the one next to the dropdown that we've set to Title. Now, in the step configuration pane, go to the Populate with task argument dropdown and choose title. Click on the Play button to verify that this step does indeed set the appropriate value.

What you have done for the title parameter in the Advanced Search page's first search field, you can now do for the author and subject parameters in the other two fields. Go ahead: add two more pairs of Set form value steps, and test them. (Somewhat confusingly, the value that the AMNH Digital Library's Advance Search page uses for field-selection dropdown when it's set to "Subject" is keyword. Don't worry, you didn't make a mistake.)

Finally, we can submit the search form. This is done exactly the same as for the simple search: add a Click action, Select node and hit the search form's Search button.

Test the new task using the Play button, and a single record should be found. This can be parsed using the existing parse step.

Tidying up the titles

The author strings that we extracted with the XPattern parser are rather ugly, and we can usefully do more work on them.

To get set up for this work, return to the original search (keyword) task, and hit the Play All button to re-do the search that finds 85 hits. Return to the parse task, and re-run it to get a set of results into the Builder.

Now we can get to work.

Splitting and cleaning author names

The first thing to do is split the author strings apart into separate authors: this is useful because when multiple values are returned separately rather than as part of a glued-together string, they can be used in facet lists.

Add a Split result step, and configure it as follows:

In the Result list dropdown, select

results.Set Result to transform to

author.Set the Regular expression to

;\s*(semicolon, backslash, lowercase letter 's', asterisk). This matches a literal semicolon followed by any number of spaces, tabs, etc.Set Result to set to

author, so that the split values will replace the existing author string. (Alternatively, they could be copied into a separate field.)

Running this step does not change the single authors at all, but splits compound author strings like "Brown, Barnum.; Schlaikjer, Erich Maren, 1905-" into multiple authors.

Removing trailing periods

We see that some author names have terminating periods, while others do not -- for example, in the field that was split in the previous step, "Brown, Barnum." ends with a period, while "Schlaikjer, Erich Maren, 1905-" does not. We can tidy this up with a Transform result step: add the step, set the Result list, Result to transform and Result to set as with the previous step (results, author, author), and set the Regular expression to \.$ (backslash, period, dollar). This matches a period at the end of a value only. Leave the Replace with field blank, since we want to replace the trailing period with nothing. Press the Play button to check that this step does indeed remove the period from the end of "Brown, Barnum.".

Removing birth and death dates

We now see that some of the author names have birth dates, or both birth-and-death dates, after them, whereas others do not. For example, the fourth record has three authors, Osborn, Henry Fairfield, 1857-1935, Brown, Barnum, and Lull, Richard Swann, 1867-. To remove these dates, we use a regular expression that matches a comma, followed by zero or more whitespace characters, then any number of digits and minus signs at the end of the string.

Add another Transform result step, as before with Result list set to results, Result to transform set to author and Result to set set to author. Set the Regular expression to ,\s*[0-9-]+$ and leave the Replace with string empty.

Press the Play button, and watch all the dates disappear from the ends of the author names.

Reversing the "Last name, First name" format

Now we have author names like Osborn, Henry Fairfield, Brown, Barnum, and Lull, Richard Swann. These are all in a consistent format within the AMNH Digital Library database, but normalised names in this last name, first name format are generall unusual, and so client software that uses these names to generate facet lists will not recognise that Osborn, Henry Fairfield in this database is the same author as Henry Fairfield Osborn in another. So in order to make our connector a better citizen in the metasearching world, we'll finish up by switching the names into the more common form.

For this, we will use yet another Transform result step on the author field (so set up Result list, Result to transform and Result to set as before). This time, we need a more sophisticated regular expression that captures both the surname and the forenames separately. Set Regular expression to (.*),\s*(.*). This matches and captures any sequence of characters, followed by a comma and zero or more spaces, followed by another sequence of any characters, which is also captured. Set Replace with to $2 $1, which simply emits the two captured substrings in reverse order.

Hit the Play button: all the author names are converted into conventional form.

Putting it together

Now that the parse task is complete, you can test it as a whole. Use the next task to step on to hits 11-20, then go back to the parse task, and hit the Play All button. All of the URLs and authors should appear correctly. in the Results area.

Is Javascript necessary?

Thanks to the popularization of unobtrusive javascript, progressive enhancement and graceful degradation as web development best practices, there is often the possibility that one can operate a web application with JavaScript entirely disabled. This is definitely a win as far as creating a connector is concerned: fewer files to load, fewer HTTP connections, less processing, etc.

When first evaluating a web site you plan to build a connector for, try operating it without JavaScript. It's important to check this early on: pages can offer quite different markup for their non-JavaScript versions and this will change the approach you take to developing the connector and the paths to the various elements within the site.

Rather than going back and forth to your browser preferences you can toggle JavaScript off and on by using the convenient green button with .js on it at the bottom right corner of any Firefox instance running the Builder. Upon determining where in the process to disable scripting, Set preference steps can be inserted to that effect.

Sites without hit counts

One of the most ubiquitous steps in any connector is "extract value", most frequently used to pick up the number of "hits" associated with an executed search. Without exception, this step must be included whenever result hit counts are available from a website.

Occasionally, however, websites do not provide hit counts for searches. Although a specific count is required for complete processing, we have coded a work-around for "no hit" situations, so that connector authors do not have to create hit counts out of thin air when target sites do not provide them.

Simply put, if there is no identifiable results hit count on the website, just ignore that step -- our software will provide an internal value so the connector will function properly.

The requirement for covering a "zero hits" situation -- where authors must provide a count of "0" for searches yielding no results at all -- is still valid, even if the site provides no hit counts for successful searches. Without including appropriate "zero hits" steps in the connector, it will fail nightly testing in the repository.

XPath refinement (making "Next" work on the 2nd page)

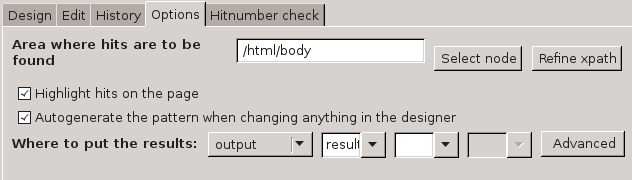

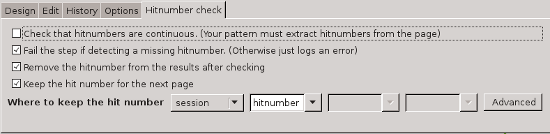

To use click, extract value, and some other steps, you need to choose a target for the action. When you click select node and move your mouse around the page, elements are highlighted with a dashed outline. The name of the element is also noted at the top of the window.